Daniel Kahneman - “Thinking, Fast and Slow”

While it starts somewhat cringeworthy and could have easily been three times shorter, I still found it a great read in the end. I won’t be making a thorough summary as it appears Wikipedia’s got a very good one. I’ll focus on the things that made the biggest impression on me.

The book’s central idea is that humans are inconsistent in their decision making and behavior because of two dualisms within us:

- System 1 vs System 2;

- the Experiencing Self vs the Remembering Self.

He contrasts complex and sometimes unpredictable (irrational?) humans with fictitious “econs”, who are the simplified predictable agents of economic models. Unlike econs, humans cannot “think statistically”, and thus don’t always choose what’s best for them, don’t always behave rationally, don’t always answer the question that was asked, and sometimes consistently prefer worse experiences over better ones.

System 1 and System 2

This is oddly reminiscent of Jaynes’ bicameral mind. There’s an intuitive, instinctive, fast System 1 in our brains that does most of the operational duties of the mind. There’s also a rational, cautious, slow System 2 that with effort and focus can achieve new and complex goals, overcome surprising obstacles, or find new meaning.

Kahneman gives plenty of examples where people think that they’re rational and logical but actually rely on System 1 and, through it, make decisions that are neither rational nor logical. We tend to jump to conclusions, we don’t know what we don’t know ("What you see is all there is"), we quickly substitute easier questions for hard ones in our heads and answer those, we have biases, and we’re prone to anchoring effects, framing effects, the availability heuristic, attribute substitution, the planning fallacy, pervasive optimistic bias, but also loss aversion and the sunk cost fallacy.

When System 2 is at work, your pupils can be observed to dilate, which is why Kahneman calls System 2-specific tasks “pupil-dilating tasks”. System 2 tires easily, so people often substitute an easier task for a hard one to reduce mental effort.

Fortunately, sometimes this isn’t the case. Mihaly Csikszentmihalyi researched the state of flow, which allows for what’s perceived as effortless concentration in which you lose track of time. It’s called the “optimal experience” because of how joyful and compelling it is.

What you see is all there is

People make decisions as if they knew the entire picture. They don’t. Even when they think they know what they don’t know, that’s still not the entire picture: much of what they don’t know lies beyond anything they ever suspected was possible.

This often makes decisions myopic, but worse yet, makes hindsight about the decision myopic as well. Kahneman calls this “what you see is all there is”. Overwhelmingly the data in front of us present themselves as the most important. The most treacherous thing about this effect is that it’s often invisible to us. We make blind decisions not even realizing that we didn’t have all the facts. Or, when faced with a hard dilemma, our System 1 often readily swaps it out for a simpler analogue that is easier to solve but not at all equivalent.

System 1’s tendency to only value what’s in front of it, plus its ability to invisibly substitute hard questions for ones that can be answered immediately, confuses our perspective and makes it hard to prioritize in life. Kahneman says:

Nothing in life is as important as you think it is when you are thinking about it.

I made myself smile and I’m actually feeling better

This is due to priming, a mechanism of the “associative machine” inside our brains. For example, if the idea of EAT is currently on your mind (whether or not you are conscious of it), you will be quicker than usual to recognize the word SOUP when it is spoken in a whisper or presented in a blurry font.

Psychologists think of ideas as nodes in a vast network, called associative memory, in which each idea is linked to many others. There are different types of links: causes are linked to their effects, things to their properties, things to the categories to which they belong. In "An Enquiry Concerning Human Understanding" Hume reduced the principles of association to three: resemblance, contiguity in time and place, and causality. In the current view of how associative memory works, we no longer think of the mind as going through a sequence of conscious ideas, one at a time. Instead, a great deal happens at once.

If something can be recalled then it must be important

This is called the “availability heuristic”: we misjudge the likelihood of an event based on how easily (or not) we can bring up examples of similar events from memory. Examples include child abductions or airplane accidents, which are extremely rare but receive widespread media coverage when they happen.

“Boring” medical facts are also less likely to draw public attention and so statistical judgments on them are often flawed:

- Strokes cause almost twice as many deaths as all accidents combined, but 80% of respondents judged accidental death to be more likely.

- Tornadoes were seen as more frequent killers than asthma, although the latter causes 20 times more deaths.

- Death by accidents was judged to be more than 300 times more likely than death by diabetes, but the true ratio is 1:4.

The book also gives examples of how to talk about this effect:

- “He underestimates the risks of indoor pollution because there are few media stories on them. That’s an availability effect. He should look at the statistics.”

- “She has been watching too many spy movies recently, so she’s seeing conspiracies everywhere.”

- “The CEO has had several successes in a row, so failure doesn’t come easily to her mind. The availability bias is making her overconfident.”

Familiarity breeds liking

A concept related to the first two is “cognitive ease”: something that is familiar is more easily believed. That means lies repeated often enough will eventually be believed (including the claim that it was Goebbels who said so; it wasn’t).

Things can be artificially made familiar by using a bigger and cleaner font in text, affecting people’s mood, or telling stories that draw on desirable associations. The opposite is true as well: a small font makes text less appealing to read, and thus weakens the message.

Jumping to conclusions

Causal stories are much more attractive to the associative machine than treating raw data at face value. This is valuable since it lets us deal confidently with ambiguous circumstances. But sometimes we see patterns where there are none, or look for causal links when faced with pure randomness.

One interesting example of this is the “halo effect” in which an impression made through some trait carries over to judgements of that same person’s other traits. Examples: “he looks the part”, “he was asked whether he thought the company was financially sound, but he couldn’t forget that he likes their product”.

Framing

The same information presented using different phrasing can sound very different to humans (i.e. not econs). The example I resonated with most was the following pair of equivalent sentences:

- The one-month survival rate is 90%.

- There is 10% mortality in the first month.

It turns out that when physicians were presented with the first sentence, 84% opted in favor of the treatment, whereas among physicians presented with the second sentence, 50% opted against it.

So clearly some language causes emotional reactions in the listener that lead to loss aversion or overconfidence. Similarly, units of measure might be misleading. A good example from the book:

- Adam switches from a gas-guzzler of 12 mpg to a slightly less voracious guzzler that runs at 14 mpg.

- The environmentally virtuous Beth switches from a 30 mpg car to one that runs at 40 mpg.

Suppose both drivers travel equal distances over a year. Who will save more gas by switching?

Intuitively Beth’s action is more significant both in the number difference and in the percentage change. But that doesn’t mean the actual savings follow. If the two car owners both drive 10,000 miles, Adam will reduce his consumption from 833 gallons to 714 gallons, saving 119 gallons. Beth’s use of fuel will drop from 333 gallons to 250, saving “only” 83 gallons.
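The arithmetic is easy to check. Here's a minimal Python sketch, assuming the 10,000 miles a year from the example; it also prints gallons per 100 miles, a fuel-per-distance frame that makes the comparison easier to read (the helper names are my own):

```python
MILES_PER_YEAR = 10_000  # assumption taken from the example above


def gallons_per_year(mpg: float, miles: float = MILES_PER_YEAR) -> float:
    """Fuel used in a year by a car with the given miles-per-gallon rating."""
    return miles / mpg


def annual_savings(old_mpg: float, new_mpg: float) -> float:
    """Gallons saved per year by switching from old_mpg to new_mpg."""
    return gallons_per_year(old_mpg) - gallons_per_year(new_mpg)


for name, old, new in [("Adam", 12, 14), ("Beth", 30, 40)]:
    pct = (new - old) / old * 100
    # Gallons per 100 miles: in this frame the savings are directly comparable.
    gp100_old, gp100_new = 100 / old, 100 / new
    print(f"{name}: +{pct:.0f}% mpg, "
          f"{gp100_old:.2f} -> {gp100_new:.2f} gal/100 mi, "
          f"saves {annual_savings(old, new):.0f} gal/year")
```

Beth's improvement looks twice as big in mpg terms (+33% vs +17%), but in gallons per 100 miles Adam drops by about 1.19 and Beth by about 0.83, which is the same 119 vs 83 gallons a year.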

The most important thing about framing is that even trained professionals are susceptible to it. It takes caution and discipline to reframe what you’re hearing to balance out the framing effect.

Base-rate neglect

Stereotypes influence our judgement of how likely an event is, even when we know that the base rate (how common each alternative is in the population as a whole) points the other way. From the book:

If you must guess whether a woman who is described as “a shy poetry lover” studies Chinese literature or business administration, you should opt for the latter option. Even if every female student of Chinese literature is shy and loves poetry, it is almost certain that there are more bashful poetry lovers in the much larger population of business students.

“This startup looks as if it could not fail, but the base rate of success in the industry is extremely low. How do we know this case is different?”
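To see why the base rate wins, here is a minimal Python sketch of Bayes' rule over the two majors. The enrolment numbers and the 5% share of shy poetry lovers among business students are invented for illustration; the 100% for Chinese literature students is the book's deliberately extreme assumption.

```python
def posterior(prior_counts: dict[str, int], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over discrete hypotheses: P(h | evidence) is proportional to count(h) * P(evidence | h)."""
    joint = {h: prior_counts[h] * likelihoods[h] for h in prior_counts}
    total = sum(joint.values())
    return {h: v / total for h, v in joint.items()}


# Hypothetical enrolment figures: business administration is far more popular.
students = {"Chinese literature": 1_000, "business administration": 50_000}
# Worst case for the base rate: every Chinese literature student fits the description,
# while only a hypothetical 5% of business students do.
fits_description = {"Chinese literature": 1.0, "business administration": 0.05}

p = posterior(students, fits_description)
print(p)
```

Even with every Chinese literature student fitting the description, roughly 71% of the shy poetry lovers in this made-up population study business.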

Linda

The most bizarre example of this is the “Linda” experiment, in which a person was described as a thirty-one-year-old, single, outspoken, and very bright woman who majored in philosophy and was deeply concerned with issues of discrimination and social justice.

Participants in the experiment were asked to rank how likely it was for Linda to be:

- a teacher in elementary school;

- a clerk in a bookstore who also takes yoga classes;

- active in the feminist movement;

- a psychiatric social worker;

- a member of the League of Women Voters;

- a bank teller;

- an insurance salesperson; or

- a bank teller and is active in the feminist movement.

The problem sets up a conflict between the intuition of representativeness and the logic of probability. Linda sounds like a stereotypical bookstore worker taking yoga classes, but judging from occupational base rates, it’s much more likely for her to be a bank teller. However, stereotypical bank tellers don’t “look like” radical feminists, which further lowers our inclination to mark Linda as a bank teller.

But the true twist is this: the probability that Linda is a feminist bank teller must be lower than the probability of her being a bank teller. When you specify a possible event in greater detail you can only lower its probability. The set of bank tellers is larger than the set of feminist bank tellers. And yet we’re intuitively drawn to choosing she’s a bank teller active in the feminist movement.
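The conjunction rule can be checked on any population, however it's generated. A minimal Python sketch with made-up attribute rates (they're an assumption; the inequality doesn't depend on them):

```python
import random

random.seed(0)
# A hypothetical population: each person independently gets two attributes.
# The rates are made up; the inequality below holds for ANY rates or correlations.
people = [
    {"bank_teller": random.random() < 0.02, "feminist": random.random() < 0.3}
    for _ in range(100_000)
]

p_teller = sum(p["bank_teller"] for p in people) / len(people)
p_feminist_teller = sum(p["bank_teller"] and p["feminist"] for p in people) / len(people)

# Conjunction rule: P(A and B) <= P(A), because every feminist bank teller is a bank teller.
print(f"P(bank teller) = {p_teller:.4f}, P(feminist bank teller) = {p_feminist_teller:.4f}")
```

Adding the detail "and is active in the feminist movement" can only filter people out of the bank-teller group, never add anyone to it.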

Relatedly, when rating the following events, we’d intuitively judge the latter as more likely, because its extra detail makes it sound more plausible:

- A massive flood somewhere in North America next year, in which more than 1,000 people drown.

- An earthquake in California sometime next year, causing a flood in which more than 1,000 people drown.

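This is contrary to basic logic: an earthquake-triggered flood in California is just one of the many ways a massive flood could happen somewhere in North America, so it cannot be more likely than the first event.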

Green cab drivers are a collection of reckless madmen

A cab was involved in a hit-and-run accident at night. Two cab companies, the Green and the Blue, operate in the city. You are given the following data:

- 85% of the cabs in the city are Green and 15% are Blue.

- A witness identified the cab as Blue.

- The court tested the reliability of the witness under the circumstances that existed on the night of the accident and concluded that the witness correctly identified each one of the two colors 80% of the time and failed 20% of the time.

What is the probability that the cab involved in the accident was Blue rather than Green? At first it seems like it’s “likely” that it was a Blue cab. But if the first piece of information in the problem was reworded in a way that is indistinguishable mathematically, it feels much different psychologically:

- The two companies operate the same number of cabs, but Green cabs are involved in 85% of accidents.

- The rest as in the previous case.

How likely does it sound now that it was the Blue cab and not the Green? The actual answer is the same in both cases: the chances for the two colors are roughly equal, with a 41% probability that the cab was Blue. This reflects the fact that the base rate of Green cabs is a little more extreme than the reliability of the witness who reported a Blue cab.
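The 41% figure follows from Bayes' rule. A short Python sketch that plugs in the numbers from the problem (the function name is my own):

```python
def posterior_blue(base_rate_blue: float, witness_accuracy: float) -> float:
    """P(cab was Blue | witness says Blue), by Bayes' rule."""
    # Blue cab, correctly identified as Blue.
    says_blue_and_blue = base_rate_blue * witness_accuracy
    # Green cab, mistakenly identified as Blue.
    says_blue_and_green = (1 - base_rate_blue) * (1 - witness_accuracy)
    return says_blue_and_blue / (says_blue_and_blue + says_blue_and_green)


print(f"{posterior_blue(0.15, 0.80):.3f}")  # 0.12 / (0.12 + 0.17)
```

Both framings feed the same 15% prior and 80% witness accuracy into the formula; only the psychological story around the 15% changes.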

This example illustrates two types of base rates. Statistical base rates are facts about the population to which a case belongs, and people tend to treat them as irrelevant to the individual case. Causal base rates change your view of how the individual case came to be. Humans treat the two very differently: statistical base rates tend to be underweighted or neglected, while causal ones are readily combined with the other information about the case.

Talent and luck

Kahneman says his favorite equations are:

success = talent + luck

great success = a little more talent + a lot of luck

He explains that unusually good performance is much more heavily influenced by luck than by talent. This explains how a player might be at the top of their game one season but not that great the next. It’s “regression to the mean”, and it’s normal, but we invent misguided causal stories to explain it.

For example, when a child is praised after a good sports result, they seem more likely to disappoint on the next try. But when they’re scolded after a bad result, they seem more likely to do great on the next try. This leads to the belief that punishment works better than rewards. In fact, in both cases we’re dealing with regression to the mean, and the teacher’s action is less influential than the teacher thinks.
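Regression to the mean falls straight out of Kahneman's first equation. A minimal Python sketch, assuming talent is fixed per player, luck is redrawn every season, and both are standard normal (all of this is a toy model, not data from the book):

```python
import random
import statistics

random.seed(42)
N = 10_000
# Toy model of "success = talent + luck":
# talent is fixed per player, luck is drawn fresh each season (both standard normal).
talent = [random.gauss(0, 1) for _ in range(N)]
season1 = [t + random.gauss(0, 1) for t in talent]
season2 = [t + random.gauss(0, 1) for t in talent]

# Pick the top 1% of season-1 performers.
top = sorted(range(N), key=lambda i: season1[i], reverse=True)[: N // 100]
mean1 = statistics.mean(season1[i] for i in top)
mean2 = statistics.mean(season2[i] for i in top)
print(f"top performers: season 1 avg {mean1:.2f}, season 2 avg {mean2:.2f}")
```

Nobody is praised or scolded between the two seasons, yet the top performers' average drops back toward the middle, because part of what put them on top was luck that doesn't repeat.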

Hindsight is misleading

It’s easy to create a tidy linear success narrative for a company, an actor, or a scientist. That ignores the many people who followed similar paths but failed. Because luck plays a large role, the quality of leadership, management practices, and talent cannot be reliably inferred from observations of success. Doing so is simply an illusion of understanding.

A related bias is “outcome bias”, where a decision is judged by its result (success or failure). Instead, it should be judged by the knowledge the decision maker had at the time. Good decisions might still lead to bad outcomes, and insane decisions might still lead to lucky victories. The complicated thing about this is that experts who are reckless or base their decisions on irrational inputs will be praised as long as their decisions lead to good outcomes. They become heroes. Sadly, the experts who behave more carefully, because they understand that they’re operating in a complicated world with limited information, are judged to be weak and indecisive.

Optimism leads to overconfidence

Some people are also more optimistic than is warranted, and they’re drawn to entrepreneurship. Most of them estimate their chances of success at more than twice the true average. An interesting statistic comes from the Canadian Inventor’s Assistance Program, which gives inventors an objective assessment of the commercial prospects of their idea. Almost half of the inventors who are told their idea is hopeless continue to work on it, and as a result lose twice as much money as they would have if they had quit the moment they received the evaluation. That’s despite the forecasts being very accurate: of 411 projects that received the lowest grade, only 5 reached commercialization and none was successful in the market.

The people who have the greatest influence on the lives of others are likely to be optimistic and overconfident, and to take more risks than they realize.

We might be able to partially tame optimism with a premortem:

Imagine that we are a year into the future. We implemented the plan as it now exists. The outcome was a disaster. Please take 5 to 10 minutes to write a brief history of that disaster.

Trust the algorithm

Kahneman brings up the story of the Apgar test and explains that trusting algorithms is usually much better than allowing people to make arbitrary decisions based on the false notion of each case being different and “an expert’s personal touch”.

He goes on to explain how he developed an algorithm for the interview process for combat duty in the Israel Defense Forces in the 1950s. He focused on standardized factual questions about the candidate’s past, which he called “measurements”, and designed them to combat the “halo effect” and other biases. The last question was different: it catered to the previous, intuitive selection process ("close your eyes, try to imagine the recruit as a soldier, and assign him a score on a scale of 1 to 5"). The new system was a success, and the last question also turned out to be a good predictor of a candidate’s success. Kahneman writes:

Intuition adds value even in the justly derided selection interview, but only after a disciplined collection of objective information and disciplined scoring of separate traits. Do not simply trust intuitive judgment—your own or that of others—but do not dismiss it, either.
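The procedure can be sketched as a tiny scorecard. This is a minimal Python illustration, assuming hypothetical trait names and an equal-weight sum; what it tries to capture is the ordering described above: score each trait separately first, and only then record the intuitive judgment.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical trait list: the book's procedure scored several traits separately,
# but these names are placeholders, not Kahneman's exact list.
TRAITS = ("responsibility", "sociability", "punctuality")


def check_scale(score: int) -> None:
    if not 1 <= score <= 5:
        raise ValueError("scores must be on a 1-5 scale")


@dataclass
class Interview:
    trait_scores: dict[str, int] = field(default_factory=dict)
    intuitive_score: Optional[int] = None

    def score_trait(self, trait: str, score: int) -> None:
        """Record one factual, separately scored trait."""
        if trait not in TRAITS:
            raise ValueError(f"unknown trait: {trait}")
        check_scale(score)
        self.trait_scores[trait] = score

    def score_intuition(self, score: int) -> None:
        """The 'close your eyes' judgment, allowed only after every trait is scored."""
        if set(self.trait_scores) != set(TRAITS):
            raise RuntimeError("score every trait before giving an intuitive score")
        check_scale(score)
        self.intuitive_score = score

    def total(self) -> int:
        """Equal-weight sum of all scores (a simplification chosen for this sketch)."""
        return sum(self.trait_scores.values()) + (self.intuitive_score or 0)


candidate = Interview()
for trait, score in zip(TRAITS, (4, 3, 5)):
    candidate.score_trait(trait, score)
candidate.score_intuition(4)
print(candidate.total())  # 16
```

The `RuntimeError` is the "discipline" part: the intuitive score can't be given first and then color the individual trait scores.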

Importantly, even an expert’s intuition is often useless on its own if used carelessly. But when it follows a careful evaluation, “intuition” is really just “pattern matching”, which naturally favors practical expertise. To judge somebody’s expertise, it’s therefore important to understand how much practice that person has had and how much feedback they’ve been receiving.

He brings up the example of a fire commander who, through a “sixth sense of danger”, suddenly ordered his team out of a house whose kitchen they were hosing down. The floor collapsed almost immediately after the firefighters escaped. It turned out that he had recognized something different about this particular fire: it was quieter and hotter than usual. The heart of the fire was in the basement below.

We’re terrible at planning

Our estimates of effort and task duration are unrealistically close to best-case scenarios. We can combat this by consulting the statistics of similar cases.

“He’s taking an inside view. He should forget about his own case and look for what happened in other cases.”

“Suppose you did not know a thing about this particular legal case, only that it involves a malpractice claim by an individual against a surgeon. What would be your baseline prediction? How many of these cases succeed in court? How many settle? What are the amounts? Is the case we are discussing stronger or weaker than similar claims?”
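This outside view is usually called reference class forecasting. Here's a minimal Python sketch, assuming you have recorded how much comparable past projects overran their plans; the `history` numbers and the choice of the median and 80th percentile are invented for illustration:

```python
import statistics


def outside_view(inside_estimate: float, past_overrun_ratios: list[float]) -> dict[str, float]:
    """Rescale an inside-view estimate using how similar past projects actually went.

    past_overrun_ratios: actual duration / planned duration, one per comparable project.
    Needs at least two data points for statistics.quantiles.
    """
    median_ratio = statistics.median(past_overrun_ratios)
    # quantiles(n=10) returns the 9 decile cut points; index 7 is the 80th percentile.
    p80_ratio = statistics.quantiles(past_overrun_ratios, n=10)[7]
    return {
        "inside view": inside_estimate,
        "outside view, median": inside_estimate * median_ratio,
        "outside view, 80th percentile": inside_estimate * p80_ratio,
    }


# Invented reference class: how much eight comparable projects overran their plans.
history = [1.1, 1.4, 1.5, 1.8, 2.0, 2.2, 2.5, 3.0]
forecast = outside_view(2.0, history)  # a plan that looks like 2 years from the inside
print(forecast)
```

With this made-up history, a plan that looks like 2 years from the inside becomes about 3.8 years at the median of comparable projects.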

Prospect Theory

The fourth part of the book is focused on how people make choices.

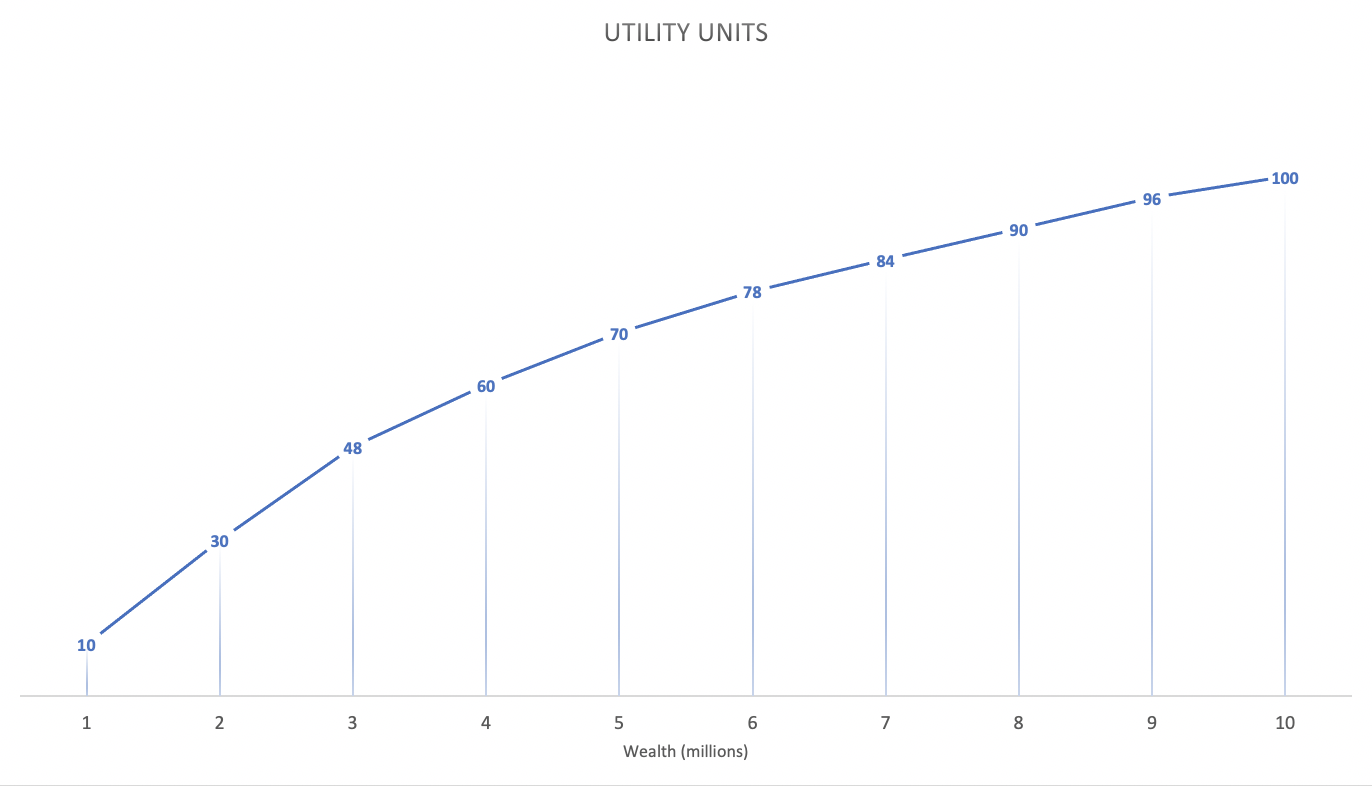

It starts off with demonstrating flaws in Daniel Bernoulli’s utility theory. Bernoulli observed that most people dislike risk (the chance of receiving the lowest possible outcome), and if they are offered a choice between a gamble and an amount equal to its expected value they will pick the sure thing. His idea was that people’s choices are based not on dollar values but on the psychological values of outcomes, their utilities. The psychological value of a gamble is therefore not the weighted average of its possible dollar outcomes; it is the average of the utilities of these outcomes, each weighted by its probability. For example, let’s apply utility units to wealth (note that there’s diminishing returns the higher the wealth):

Let’s say there are two choices:

- equal chances to end up with either 1 million dollars or 7 million dollars; or

- have 4 million dollars with certainty.

In utility units, option one is worth (10+84)/2 = 47, whereas option two is worth 60, so most people would choose option two. That’s good insight. It also demonstrates that losses loom larger than gains: when owning 5 million dollars, losing a million dollars removes 10 utility units, whereas gaining a million dollars adds just 8.
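The calculation is easy to reproduce. A minimal Python sketch using only the utility values quoted in this section (the book's table lists more wealth levels):

```python
# Utility units for wealth in millions of dollars: only the points quoted in the text.
UTILITY = {1: 10, 4: 60, 5: 70, 6: 78, 7: 84}


def expected_utility(outcomes: list[tuple[float, int]]) -> float:
    """Bernoulli: average the utilities of the outcomes, each weighted by its probability."""
    return sum(p * UTILITY[wealth] for p, wealth in outcomes)


gamble = expected_utility([(0.5, 1), (0.5, 7)])  # (10 + 84) / 2
sure_thing = expected_utility([(1.0, 4)])
print(gamble, sure_thing)  # 47.0 60.0

# Diminishing returns: from 5 million, losing 1 million costs more utility than gaining 1 million adds.
print(UTILITY[5] - UTILITY[4], UTILITY[6] - UTILITY[5])  # 10 8
```

Note that the model only ever looks at final wealth, which is exactly the weakness the next example exposes.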

However, the theory doesn’t distinguish enough between gains and losses, which leads to results inconsistent with the psychological reality of humans. Let’s see this with an example. Anthony’s current wealth is 1 million. Betty’s current wealth is 4 million. They both face the same choice:

- equal chances to end up owning 1 million or 4 million; or

- own 2 million for sure.

According to Bernoulli, they should both make the same choice, since for both of them the expected wealth is 2.5 million with the gamble vs 2 million with the sure thing. This doesn’t take reference points into account. In reality, Anthony can either double his wealth for sure or maybe quadruple it (at the risk of gaining nothing). Betty, on the other hand, loses for certain with the sure thing (option 2), while the gamble (option 1) can either bring a bigger loss or preserve her current wealth. Anthony is more likely to take the sure thing, whereas Betty is likely to gamble, as this is her only chance to keep her wealth.

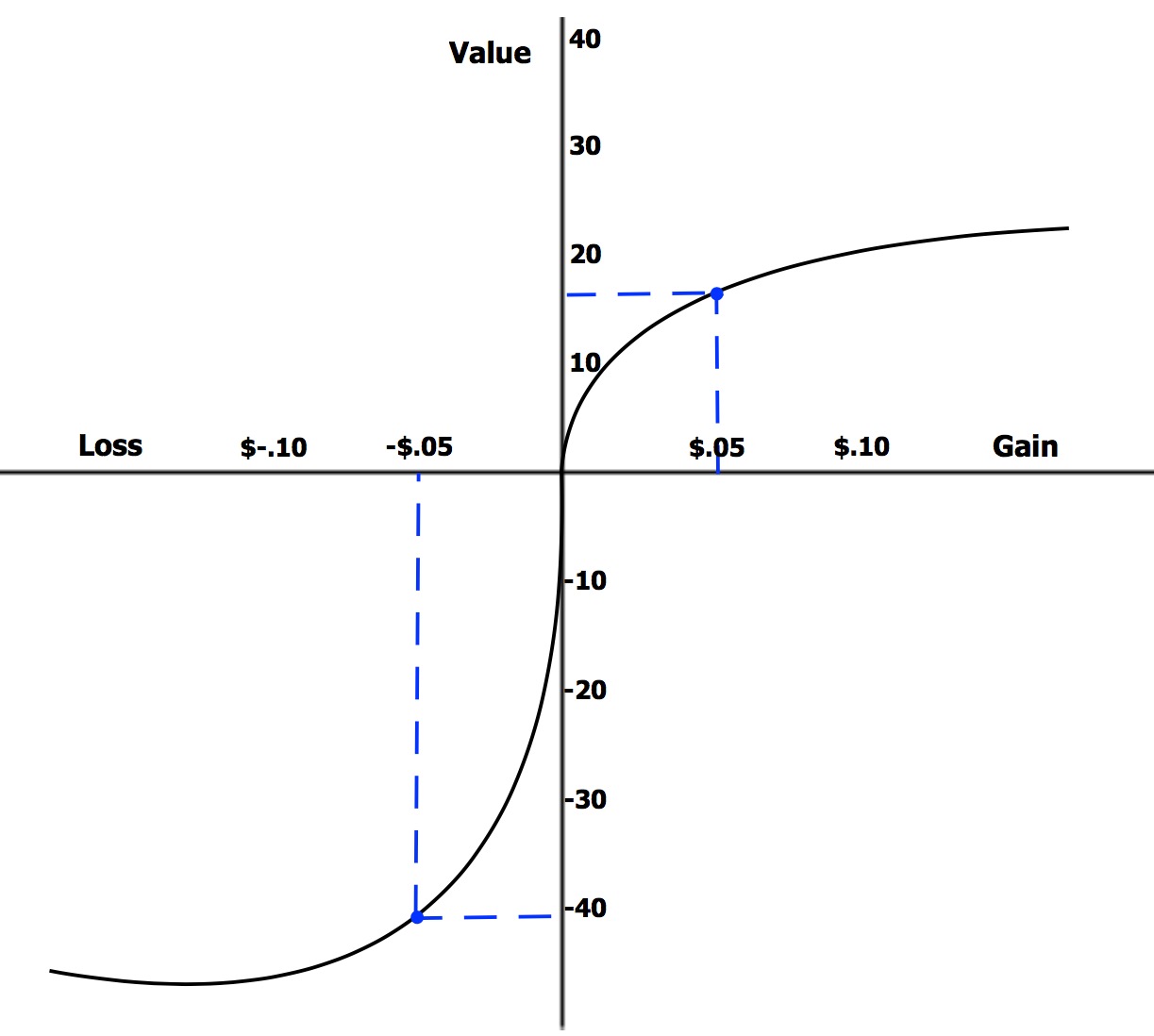

When you can only gain, you’re risk-averse. When all of your options are bad, you’d rather take the risk: you are risk-seeking. The theory explaining this is called prospect theory, and it’s best known for its demonstration of loss aversion:

We weigh losses more heavily than gains of the same monetary value. This leads to the fourfold pattern:

| | GAINS | LOSSES |
|---|---|---|
| HIGH PROBABILITY | risk averse: fear of disappointment | risk seeking: hope to avoid loss |
| LOW PROBABILITY | risk seeking: hope of large gain | risk averse: fear of large loss |

Both scenarios where we tend to be risk averse are about fear. Say there’s a 95% chance to win $10,000 and, consequently, a 5% chance of getting nothing. If someone offers us $9,500 for sure, we’re likely to take the safe option to avoid disappointment. That’s the high-probability gains scenario. Similarly, in the low-probability losses scenario, where there’s a 5% chance of losing $10,000, we’re likely to settle for a sure loss of $500 just to avoid the small risk of a large loss.
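The fourfold pattern can be reproduced with prospect theory's two ingredients: a value function that is steeper for losses, and decision weights that overweight small probabilities and underweight large ones. Here's a minimal Python sketch. The parameter values are the estimates Tversky and Kahneman published in their 1992 paper on cumulative prospect theory, not numbers from this book, so treat them as an assumption. Each sure amount equals the gamble's expected value, so an econ would be indifferent in all four cases:

```python
# Assumed parameters: Tversky & Kahneman (1992) estimates, not taken from this book.
ALPHA = 0.88       # curvature of the value function (gains and losses)
LAMBDA = 2.25      # loss aversion: losses weigh about 2.25x as much as equal gains
GAMMA_GAIN = 0.61  # probability-weighting curvature for gains
GAMMA_LOSS = 0.69  # probability-weighting curvature for losses


def value(x: float) -> float:
    """Value of a change relative to the reference point (not of total wealth)."""
    return x ** ALPHA if x >= 0 else -LAMBDA * (-x) ** ALPHA


def weight(p: float, gamma: float) -> float:
    """Decision weight: overweights small probabilities, underweights large ones."""
    return p ** gamma / (p ** gamma + (1 - p) ** gamma) ** (1 / gamma)


def prefers_gamble(outcome: float, p: float, sure: float) -> bool:
    """Compare 'p chance of outcome, else nothing' with getting 'sure' for certain."""
    gamma = GAMMA_GAIN if outcome >= 0 else GAMMA_LOSS
    return weight(p, gamma) * value(outcome) > value(sure)


for label, outcome, p, sure in [
    ("high probability, gains", 10_000, 0.95, 9_500),
    ("low probability, gains", 10_000, 0.05, 500),
    ("high probability, losses", -10_000, 0.95, -9_500),
    ("low probability, losses", -10_000, 0.05, -500),
]:
    choice = "gamble (risk seeking)" if prefers_gamble(outcome, p, sure) else "sure thing (risk averse)"
    print(f"{label}: {choice}")
```

Running it picks the sure thing in the two fear cells and the gamble in the two hope cells, matching the table.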

Interestingly, for an exposed actor who is likely to face similar dilemmas often, it pays to hold firm and accept small risks. Even if a single freak loss occurs, refusing to settle saves the settlement costs in all the other cases. This makes sense as company policy (“never settle frivolous lawsuits”, “never buy extended warranties”), but, surprisingly, the same logic applies to buying insurance in general.

This is further complicated by the endowment effect: people are more likely to keep something they own than to acquire that same thing when they do not own it. We can counter this by explicitly telling ourselves to “think like a trader”, where “you win some, and you lose some”; in other words, by treating losses as costs.

In any case, we shouldn’t focus on a single scenario, or we will overestimate its probability. Let’s set up specific alternatives and make the probabilities add up to 100%.

“Sell high, buy low” can make you poorer

There’s a great example in the book of mental accounting interfering with our own success. We’re tempted to sell a stock when it’s high because closing that account at a gain settles the score and makes us feel like good investors. Meanwhile, we’re likely to hold on to hopeless stocks just because settling the score at a loss would mean admitting we’re bad investors.

But the past is in the past. It shouldn’t matter to our choices; only current performance and current opportunities should. Hence, cutting losses and keeping the winners is a more sensible approach to long-term success. Above all, Kahneman warns that traders who micromanage their investments tend to lose. Terry Odean found that over a seven-year period, on average, the shares that active traders sold (i.e. thought were overpriced) did better than those they bought (i.e. thought were underpriced), by a margin of 3.2 percentage points per year. The most active traders had the poorest results. Kahneman says:

Billions of shares are traded every day, with many people buying each stock and others selling it to them. The buyers think the price is too low and likely to rise, while the sellers think the price is high and likely to drop. The puzzle is why buyers and sellers alike think that the current price is wrong. What makes them believe they know more about what the price should be than the market does? For most of them, that belief is an illusion.

On marriage

In a few places in the book there are some amusingly simplistic rules for predicting the success of a marriage. One is Robyn Dawes’ formula:

frequency of lovemaking minus frequency of quarrels

Another is John Gottman’s observation that the long-term success of a relationship depends far more on avoiding the negative than on seeking the positive. In fact, he estimated that good interactions should outnumber bad ones by at least 5 to 1 because of negativity dominance (we tend to give more weight to suffering than to pleasure).

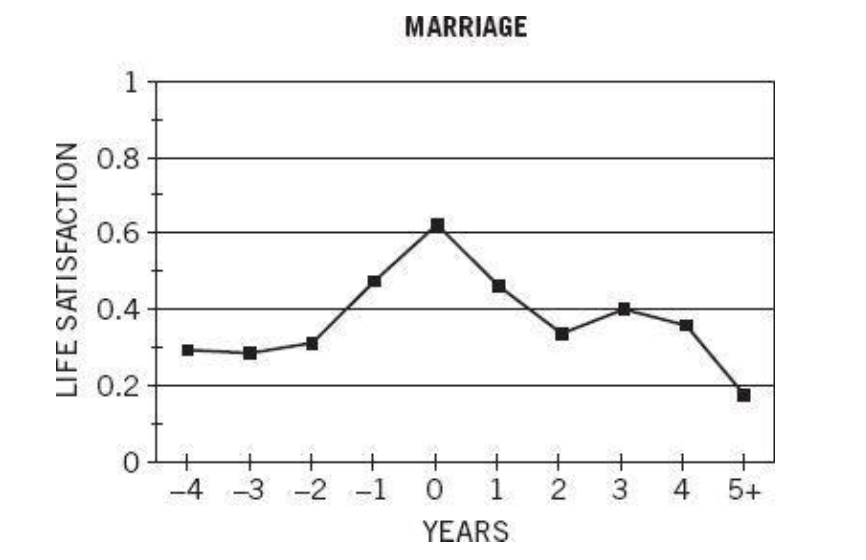

There’s also a graph of reported level of satisfaction around the time of getting married:

Kahneman explains that the decision to get married reflects, for many people, a massive error of affective forecasting. On their wedding day, the bride and the groom know that the rate of divorce is high and that the incidence of marital disappointment is even higher, but they do not believe that these statistics apply to them.

But we also need to be skeptical about the respondents’ ability to judge their life satisfaction, because System 1 so often invisibly replaces hard questions with easier ones. Since marriage is an important part of life, for many people the question might be a cue to rate their entire life. People who are about to get married are obviously happy about it (most of the time), and their memories of the event remain vivid shortly afterwards. Later, the memories fade and “marriage” stops being recognized as a discrete component of life.

Importantly, it turns out that experienced well-being is on average unaffected by marriage. This is not because marriage makes no difference to happiness but because it changes some aspects of life for the better and others for the worse. There are advantages to marriage (less time alone, financial security, regular sex) but also disadvantages (less time with friends, less independence, housework, caring for children, preparing food).

Elsewhere, he also points out that when spouses were asked, “How large was your personal contribution to keeping the place tidy, in percentages?”, and about “taking out the garbage,” “initiating social engagements,” etc. their self-assessed contributions typically added up to more than 100%. The explanation is availability bias: both spouses remember their own individual efforts and contributions much more clearly than those of the other, and the difference in availability leads to a difference in judged frequency.

At some point he also explains that the sentence “Highly intelligent women tend to marry men who are less intelligent than they are.” doesn’t have a causal story behind it. Rather, it’s due to the fact that there is no perfect correlation between intelligence scores of spouses, and that makes it inevitable that highly intelligent women will marry men who are on average less intelligent than they are. There is no controversial story to be drawn here.

Finally, he calls remarrying people overly optimistic, a “triumph of hope over experience”.

Two Selves

I am my Remembering Self and the Experiencing Self who does my living is like a stranger to me.

It turns out that even though we mostly live in the now, we strongly favor memories over what is actually happening. There are many examples of this in the book, revolving around two traits of the human mind:

- duration neglect: we don’t include the duration of an event when rating it;

- peak-end focus: when remembering an event, we put much more weight on its most vivid moment and on how it felt at its end.

This influences how divorcees feel about their marriages, how patients feel about painful procedures, how old people think about their life’s happiness, etc. When choosing future events, it matters more to us how we think we will remember them, rather than how they will feel in the moment.

In intuitive evaluation of entire lives as well as brief episodes, peaks and ends matter but duration does not. Adding 5 “slightly happy” years to a very happy life causes a substantial drop in evaluations of the total happiness of that life.
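The two traits can be caricatured as a scoring rule. This is a minimal Python sketch, assuming the simplest form of the peak-end rule (the average of the most intense moment and the final one) and invented ratings; the real findings are statistical tendencies, not an exact formula:

```python
def experienced_total(moments: list[float]) -> float:
    """What the Experiencing Self lives through: the sum over every moment."""
    return sum(moments)


def remembered(moments: list[float]) -> float:
    """Peak-end rule: average of the most intense moment and the last one.

    Assumes ratings where higher means more intense (more pain, or more happiness).
    Duration is ignored entirely (duration neglect).
    """
    return (max(moments) + moments[-1]) / 2


# Hypothetical pain ratings (0-10), one per minute of a procedure.
short_procedure = [2, 5, 8, 8]
long_procedure = short_procedure + [5, 3]  # same start, plus a milder ending

print(experienced_total(short_procedure), remembered(short_procedure))  # 23 8.0
print(experienced_total(long_procedure), remembered(long_procedure))    # 31 5.5

# Hypothetical yearly happiness ratings for a whole life.
very_happy_life = [9] * 30
with_extra_years = very_happy_life + [6] * 5
print(remembered(very_happy_life), remembered(with_extra_years))  # 9.0 7.5
```

The longer procedure contains strictly more pain yet is remembered as milder, and the five extra "slightly happy" years add happiness while lowering the life's remembered score.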

Kahneman isn’t infallible either

As he points out many times in the book, even experts trained in all the effects and traits of the human mind are still susceptible to its quirks. He admits that the knowledge in the book is useful mostly because it makes us more alert to the possibility that we are less rational agents than we think we are. He concludes that

Organizations are better than individuals when it comes to avoiding errors, because they naturally think more slowly and have the power to impose orderly procedures.

Confirming his realistic assessment of the situation, a number of studies cited in the book have been swept up in the recent replication crisis, which has shown that an alarming share of scientific findings can’t be replicated. One example I’m particularly fond of is the impossibly hungry judges.